Intelligent Chatbot for Company-Specific Information Using AWS Services

This case study demonstrates the implementation of a scalable and secure chatbot using AWS services, including Lambda, Bedrock, and Aurora, to provide accurate, context-specific responses based on internal company documents. The solution enables rapid deployment, automatic knowledge base updates, and seamless scalability to handle varying query loads.

2/4/2025 · 5 min read

Client Overview: The client needed to deploy a specialized chatbot that could efficiently respond to user queries based on the company’s internal documents, such as service manuals, policy documents, and product specifications. The challenge was to build an intelligent, scalable, and secure system that could automatically update its knowledge base with the latest company information and handle user queries in real time.

Problem Statement:

The client faced several challenges in deploying an intelligent chatbot:

Complex Infrastructure Setup: Setting up the necessary cloud infrastructure, including storage, databases, and processing functions, across multiple AWS services was time-consuming and error-prone.

Extended Deployment Timelines: Traditional deployment methods for specialized chatbot applications could take weeks or months, delaying access to critical business information.

Knowledge Base Updates: Frequent updates to the company’s services and products meant the chatbot needed to rapidly learn new information to avoid providing outdated responses.

Scalability and Maintenance: Ensuring the chatbot could scale to handle varying query loads while maintaining the infrastructure required significant technical expertise and resources.

Solution Overview:

To address these challenges, the client adopted a serverless, automated deployment model leveraging AWS services like AWS Bedrock, Amazon S3, Amazon Aurora, Lambda, and API Gateway. The chatbot was designed to answer user queries based on internal company documents, such as PDFs, and was capable of automatically updating its knowledge base with new or modified content.

The solution utilized Terraform as the Infrastructure as Code (IaC) tool to automate the deployment process, drastically reducing deployment time and ensuring consistency across different environments.

Solution Description:

Terraform as IaC Tool:

Terraform was used to automate the provisioning and setup of infrastructure, significantly reducing the time required to deploy the solution. By defining infrastructure in code, Terraform ensured that the setup was repeatable, consistent, and scalable.

AWS Bedrock for Knowledge Base:

AWS Bedrock supplied the foundation models, including the embedding model used to populate a vector database from company-provided data sources. As a managed service, it removes the work of hosting and operating models and integrates with several vector databases. Through Bedrock, the chatbot could query its knowledge base and have it refreshed as documents changed, which kept its responses accurate and up to date.

Serverless Resources for Scalability:

The solution leveraged serverless technologies to ensure the chatbot could scale dynamically to handle varying user query loads. AWS Lambda functions were used to process the data, update the knowledge base, and handle user queries without the need for managing dedicated infrastructure, ensuring high availability and low latency.
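
The query-handling Lambda described above might look roughly like the following Python sketch. It assumes an API Gateway Lambda proxy integration (the POST body arrives as a JSON string in event["body"]) and assumes the function answers through the Bedrock Agent Runtime retrieve_and_generate call; the source does not say which Bedrock API the client used. The "question" field and the KNOWLEDGE_BASE_ID and MODEL_ARN environment variables are hypothetical names chosen for illustration.

```python
import json
import os


def build_request(question, knowledge_base_id, model_arn):
    """Build keyword arguments for bedrock-agent-runtime retrieve_and_generate."""
    return {
        "input": {"text": question},
        "retrieveAndGenerateConfiguration": {
            "type": "KNOWLEDGE_BASE",
            "knowledgeBaseConfiguration": {
                "knowledgeBaseId": knowledge_base_id,
                "modelArn": model_arn,
            },
        },
    }


def handler(event, context, client=None):
    """Answer a POSTed question from the knowledge base (illustrative sketch)."""
    try:
        body = json.loads(event.get("body") or "{}")
    except json.JSONDecodeError:
        body = {}
    # "question" is a hypothetical field name for the request payload.
    question = body.get("question", "") if isinstance(body, dict) else ""
    question = question.strip() if isinstance(question, str) else ""
    if not question:
        return {"statusCode": 400, "body": json.dumps({"error": "question is required"})}

    if client is None:
        import boto3  # imported lazily so the handler can be exercised with a stub client
        client = boto3.client("bedrock-agent-runtime")

    response = client.retrieve_and_generate(
        **build_request(
            question,
            os.environ["KNOWLEDGE_BASE_ID"],  # assumed environment variable
            os.environ["MODEL_ARN"],          # assumed environment variable
        )
    )
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"answer": response["output"]["text"]}),
    }
```

Passing the client as an optional argument keeps the handler testable without AWS credentials; in a deployed function the default boto3 client would be used.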

Prompt Updates for Knowledge Base:

Whenever a new document (such as a PDF) was uploaded to Amazon S3, a Lambda function was triggered. This function issued AWS Bedrock’s StartIngestionJobCommand, which starts an ingestion job that parses the PDF content, generates embeddings with the Titan Embeddings G1 - Text v1.2 model, and stores them in Amazon Aurora. This process kept the chatbot’s knowledge base current with minimal manual intervention.
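
As a rough illustration of this trigger, here is a minimal Python sketch of such a Lambda function. StartIngestionJobCommand is the AWS SDK for JavaScript name; the sketch uses the boto3 counterpart, start_ingestion_job on the bedrock-agent client, purely as an assumption about how an equivalent function could be written. The KNOWLEDGE_BASE_ID and DATA_SOURCE_ID environment variables are hypothetical names.

```python
import os
import urllib.parse


def extract_uploaded_keys(event):
    """Return (bucket, key) pairs for every object in an S3 event notification."""
    pairs = []
    for record in event.get("Records", []):
        s3 = record.get("s3", {})
        bucket = s3.get("bucket", {}).get("name")
        key = s3.get("object", {}).get("key")
        if bucket and key:
            # S3 URL-encodes object keys in event payloads (spaces arrive as "+").
            pairs.append((bucket, urllib.parse.unquote_plus(key)))
    return pairs


def handler(event, context, client=None):
    """Start one knowledge base ingestion job if the event contains a PDF upload."""
    pdfs = [(b, k) for b, k in extract_uploaded_keys(event) if k.lower().endswith(".pdf")]
    if not pdfs:
        return {"started": False, "reason": "no PDF objects in event"}

    if client is None:
        import boto3  # imported lazily so the handler can be exercised with a stub client
        client = boto3.client("bedrock-agent")

    response = client.start_ingestion_job(
        knowledgeBaseId=os.environ["KNOWLEDGE_BASE_ID"],  # assumed environment variable
        dataSourceId=os.environ["DATA_SOURCE_ID"],        # assumed environment variable
    )
    job = response["ingestionJob"]
    return {
        "started": True,
        "ingestionJobId": job["ingestionJobId"],
        "status": job["status"],
    }
```

In this sketch the function only starts the job. Parsing, embedding, and writing vectors to the store are carried out by the ingestion job itself, not by the Lambda code.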

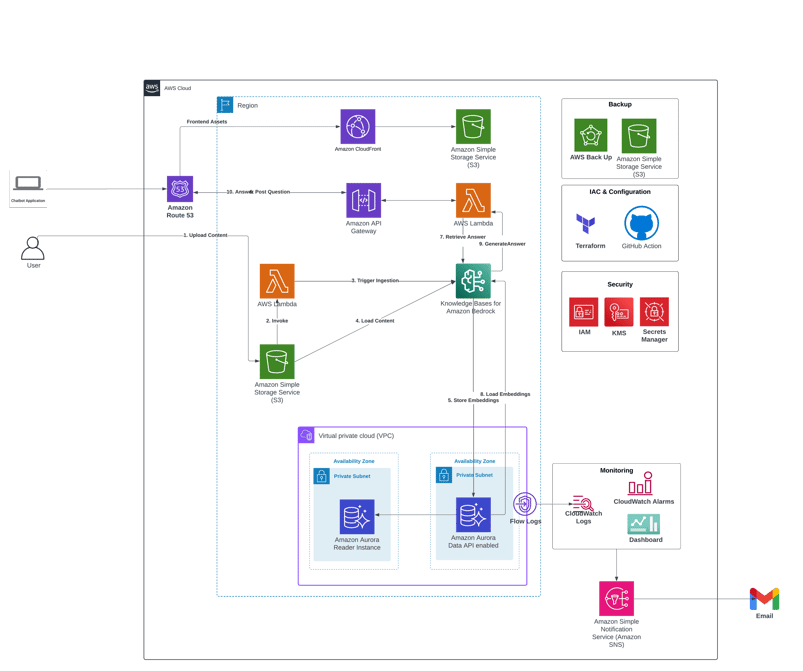

Architecture:

The architecture is designed to be highly scalable and serverless, utilizing several AWS services to automate the deployment and updating of the chatbot’s knowledge base.

Architecture Components:

Amazon S3: Used for storing static assets and PDF documents for ingestion into the chatbot’s knowledge base.

Amazon CloudFront: Serves as a CDN for content delivery, ensuring low latency and fast access to static assets.

AWS Lambda: Processes PDF files, updates the knowledge base, and handles user queries.

Amazon Aurora: Stores vector embeddings for fast querying by the chatbot.

AWS Bedrock: Provides AI models for creating embeddings and querying the knowledge base.

API Gateway: Acts as a bridge to invoke Lambda functions on user POST requests, triggering the chatbot’s response.

Architecture Diagram:

Benefits of the Solution:

Scalability:

The serverless architecture using AWS Lambda and Amazon Aurora ensured that the chatbot could scale automatically based on demand. The solution was designed to handle sudden surges in user requests, such as after a new product launch or service update.

High Availability:

The use of managed AWS services like Lambda and Aurora kept the chatbot highly available. Serverless components scaled automatically with traffic fluctuations, which was intended to avoid downtime even during peak periods.

Centralized Knowledge Base:

Amazon Aurora provided a centralized and scalable location for storing vector embeddings of company documents, ensuring that all data was easily accessible and up-to-date.
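
To make the idea of querying stored vector embeddings concrete, the following Python sketch ranks document chunks by cosine similarity to a query vector. It is a conceptual illustration only: in the deployed solution the comparison runs inside the database, and the source does not state which engine, vector extension, or distance metric was used. The function names and the tiny two-dimensional vectors are hypothetical.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors (0.0 if either is all zeros)."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same dimension")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_k(query, chunks, k=3):
    """Return the k (score, text) pairs most similar to the query vector.

    chunks is a list of (text, embedding) tuples, standing in for rows
    that would normally live in the vector store.
    """
    scored = [(cosine_similarity(query, vector), text) for text, vector in chunks]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[:k]


if __name__ == "__main__":
    # Toy embeddings; real embedding vectors have far more dimensions.
    chunks = [
        ("warranty policy", [1.0, 0.0]),
        ("reset procedure", [0.0, 1.0]),
        ("return policy", [0.9, 0.1]),
    ]
    for score, text in top_k([1.0, 0.0], chunks, k=2):
        print(f"{score:.3f}  {text}")
```

The chunks returned by a search like this are what the language model receives as context when it composes an answer.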

Quick Knowledge Base Updates:

The automated data ingestion process using AWS Lambda and AWS Bedrock allowed the chatbot’s knowledge base to be updated quickly with new company documents, reducing the risk of outdated information being provided to users.

Faster Deployment:

The Terraform-based solution allowed for quick, consistent, and automated deployment, reducing the traditional deployment time from weeks or months to just 1-2 days.

Cost Estimation:

The approximate cost for running the solution on AWS would include:

Amazon S3: Storage costs for documents and static assets.

AWS Lambda: Charges based on the number of invocations and duration of function execution.

Amazon Aurora: Costs for storing vector embeddings and performing queries.

AWS Bedrock: Charges for using AI models and processing data.

API Gateway: Costs for handling API requests.

Based on these components and the expected usage, the total estimated cost of the solution is around $100 per month per chatbot.

Implementation Guide:

The implementation was automated using Terraform, which provisioned all the necessary AWS resources. The steps included:

Cloning the Repository: Clone the repository containing the Terraform configurations.

git clone <git_repository_url>

Configuring AWS Access: Configure the AWS CLI with the necessary credentials and profile.

aws configure --profile [profile-name]

Deploying the Infrastructure: Use Terraform to deploy the required infrastructure for the chatbot.

terraform init
terraform plan
terraform apply -var-file=terraform.tfvars

Uploading Documents to S3: Upload company documents to the S3 bucket for ingestion by the chatbot.

aws s3 cp data.pdf s3://<docs_bucket_name>/data.pdf
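
After an upload, it can be useful to confirm that ingestion has finished before testing the chatbot. The Python sketch below polls an ingestion job until it reaches a terminal status. It assumes the boto3 bedrock-agent client and its get_ingestion_job call; the function name, polling defaults, and the way the job ID is obtained are illustrative assumptions rather than part of the original implementation.

```python
import time

# Statuses after which an ingestion job will not change any further.
TERMINAL_STATUSES = {"COMPLETE", "FAILED", "STOPPED"}


def wait_for_ingestion(client, knowledge_base_id, data_source_id, ingestion_job_id,
                       poll_seconds=10, max_polls=60, sleep=time.sleep):
    """Poll an ingestion job and return its terminal status.

    client is expected to behave like boto3.client("bedrock-agent").
    Raises TimeoutError if the job is still running after max_polls checks.
    """
    for attempt in range(max_polls):
        response = client.get_ingestion_job(
            knowledgeBaseId=knowledge_base_id,
            dataSourceId=data_source_id,
            ingestionJobId=ingestion_job_id,
        )
        status = response["ingestionJob"]["status"]
        if status in TERMINAL_STATUSES:
            return status
        if attempt < max_polls - 1:
            sleep(poll_seconds)  # wait before the next check
    raise TimeoutError(f"ingestion job {ingestion_job_id} did not finish in time")
```

A caller would pass the job ID returned when the ingestion job was started and treat any result other than COMPLETE as a signal to inspect the job before relying on the new content.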

Conclusion:

The solution delivered an intelligent, scalable, and secure chatbot built on AWS services. By leveraging AWS Lambda, API Gateway, and Amazon Aurora, the system provided high availability, scalability, and low-latency responses to user queries. The integration of AWS Bedrock with automated knowledge base updates kept the chatbot’s answers accurate and current as documents changed. The use of Terraform for automated deployment reduced deployment time from weeks or months to 1-2 days and ensured consistency across environments.

This approach allows the client to deploy and maintain a highly efficient and intelligent chatbot that meets the business needs of providing accurate, context-driven responses based on internal documents. The automation of infrastructure setup, data ingestion, and knowledge base updates makes the system highly efficient, while also ensuring that the chatbot can scale as business needs grow.
